I’ve recently had to perform some web scraping from a site that required login. It wasn’t as straightforward as I expected, so I’ve decided to write a tutorial for it.

For this tutorial we will scrape a list of projects from our Bitbucket account.

The code from this tutorial can be found on my GitHub.

We will perform the following steps:

- Extract the details that we need for the login

- Perform login to the site

- Scrape the required data

For this tutorial, I’ve used the following packages (can be found in the requirements.txt):

Open the login page

Go to the following page: “bitbucket.org/account/signin”. You will see the following page (log out first in case you’re already logged in).

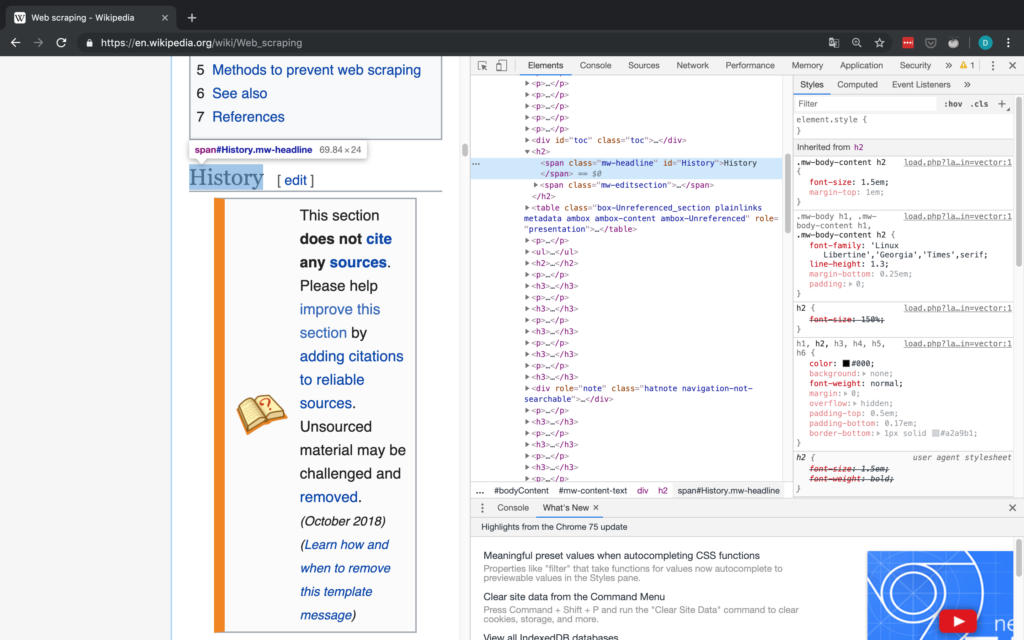

Check the details that we need to extract in order to login

In this section we will build a dictionary that will hold our details for performing login:

- Right click on the “Username or email” field and select “inspect element”. We will use the value of the “name” attribute for this input, which is “username”. “username” will be the key and our user name / email will be the value (on other sites this might be “email”, “user_name”, “login”, etc.).

- Right click on the “Password” field and select “inspect element”. In the script we will need to use the value of the “name” attribute for this input, which is “password”. “password” will be the key in the dictionary and our password will be the value (on other sites this might be “user_password”, “login_password”, “pwd”, etc.).

- In the page source, search for a hidden input tag called “csrfmiddlewaretoken”. “csrfmiddlewaretoken” will be the key and value will be the hidden input value (on other sites this might be a hidden input with the name “csrf_token”, “authentication_token”, etc.). For example “Vy00PE3Ra6aISwKBrPn72SFml00IcUV8”.

We will end up with a dict that will look like this:
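A minimal sketch of that dictionary, assuming placeholder credentials and reusing the example token quoted above (the real token is different on every visit, so it has to be read from the page rather than hard-coded):

```python
# Login payload for the sign-in form described above.
# "<USER NAME>" and "<PASSWORD>" are placeholders, not real values.
payload = {
    "username": "<USER NAME>",
    "password": "<PASSWORD>",
    # Example token from the text; in practice this is extracted from the page
    "csrfmiddlewaretoken": "Vy00PE3Ra6aISwKBrPn72SFml00IcUV8",
}

print(sorted(payload))
```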

Keep in mind that this is the specific case for this site. While this login form is simple, other sites might require us to check the request log of the browser and find the relevant keys and values that we should use for the login step.

For this script we will only need to import the following:
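Assuming the script relies on the requests package for the HTTP session and on lxml for parsing, as the steps in this tutorial suggest, the imports would probably look something like this:

```python
import requests        # HTTP client; provides the Session object
from lxml import html  # HTML parser with XPath support

# Quick sanity check that both packages are installed and importable
print(requests.__version__, html.fromstring("<p>ok</p>").text)
```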

First, we would like to create our session object. This object will allow us to persist the login session across all our requests.
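With the requests package this is a one-liner. The variable name `session_requests` is just an illustrative choice:

```python
import requests

# One Session object is reused for every request, so cookies set by the
# server during login are sent automatically on later requests.
session_requests = requests.Session()

# A fresh session starts with an empty cookie jar
print(len(session_requests.cookies))
```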

Second, we would like to extract the CSRF token from the web page; this token is used during login. For this example we are using lxml and XPath, but we could have used a regular expression or any other method that extracts this data.

** More about xpath and lxml can be found here.
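Here is a rough sketch of the extraction step. To keep it runnable without a network connection, it parses a small hand-written HTML sample that imitates the login form; the helper name `extract_csrf_token` and the sample markup are assumptions made for illustration, not the real Bitbucket page.

```python
from lxml import html


def extract_csrf_token(page_html):
    """Return the value of the hidden csrfmiddlewaretoken input."""
    tree = html.fromstring(page_html)
    values = tree.xpath("//input[@name='csrfmiddlewaretoken']/@value")
    if not values:
        raise ValueError("csrfmiddlewaretoken input not found")
    return str(values[0])


# Hand-written sample standing in for the login page source
sample_page = """
<form method="post">
  <input type="hidden" name="csrfmiddlewaretoken"
         value="Vy00PE3Ra6aISwKBrPn72SFml00IcUV8">
  <input name="username">
  <input name="password" type="password">
</form>
"""

token = extract_csrf_token(sample_page)
print(token)
```

Against the live site, we would pass in the text of the response returned by a GET request to the login page made with the session object.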

Next, we would like to perform the login phase. In this phase, we send a POST request to the login URL. We use the payload that we created in the previous step as the data. We also use a header for the request and add a referer key to it for the same URL.
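The login request could be sketched like this. The function only defines the call and does not contact the site until we invoke it. `LOGIN_URL` is assumed from the page address mentioned earlier (the `https://` scheme and trailing slash are my guess), and the `login` helper is a hypothetical name.

```python
import requests

# Assumed from the sign-in page named in the text
LOGIN_URL = "https://bitbucket.org/account/signin/"


def login(session, payload, login_url=LOGIN_URL):
    """POST the login payload, with the login URL as the Referer header."""
    return session.post(
        login_url,
        data=payload,                    # sent as form-encoded fields
        headers={"Referer": login_url},  # header names are case-insensitive
    )
```

It would be called as `result = login(session, payload)`, where `session` is the `requests.Session()` created earlier and `payload` is the login dictionary. Because the same session is used, any cookies the server sets on login are kept for the requests that follow.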

Now that we have logged in successfully, we will perform the actual scraping from the Bitbucket dashboard page.

In order to test this, let’s scrape the list of projects from the Bitbucket dashboard page. Again, we will use XPath to find the target elements and print out the results. If everything went OK, the output should be the list of buckets / projects that are in your Bitbucket account.
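As an illustration of the XPath step, the sketch below runs against a small hand-written sample instead of the real dashboard. The class name `repo-list--repo` and the markup are assumptions; the actual selector has to be taken from the dashboard's page source, which may change over time.

```python
from lxml import html

# Hypothetical selector; check the real dashboard markup before relying on it
PROJECT_XPATH = "//div[@class='repo-list--repo']/a/text()"


def extract_project_names(page_html, xpath=PROJECT_XPATH):
    """Return the stripped text of every element matched by the XPath."""
    tree = html.fromstring(page_html)
    return [str(name).strip() for name in tree.xpath(xpath)]


# Hand-written sample standing in for the dashboard page
sample_dashboard = """
<div id="dashboard">
  <div class="repo-list--repo"><a href="/user/project-a">project-a</a></div>
  <div class="repo-list--repo"><a href="/user/project-b"> project-b </a></div>
</div>
"""

print(extract_project_names(sample_dashboard))
```

On the live site, the input would be the text of the response from a GET request to the dashboard URL, made with the same logged-in session.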

You can also validate the request results by checking the status code returned by each request. It won’t always tell you that the login phase was successful, but it can be used as an indicator.

For example:
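The sketch below uses the `ok` and `status_code` attributes of a requests response. To stay offline, it demonstrates them on hand-built `Response` objects rather than real replies; the `check_response` helper is a hypothetical name.

```python
import requests


def check_response(response, step):
    """Print the status code for a step; return True when it is below 400."""
    print(f"{step}: HTTP {response.status_code}")
    return response.ok


# Hand-built responses standing in for real replies from the site
fake_ok = requests.Response()
fake_ok.status_code = 200

fake_forbidden = requests.Response()
fake_forbidden.status_code = 403

print(check_response(fake_ok, "login"))
print(check_response(fake_forbidden, "dashboard"))
```

Keep in mind that a site may answer a failed login with status 200 and simply show the login form again, so it is worth also checking the page content for something only a logged-in user would see.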

That’s it.

The full code sample can be found on GitHub.